The True Peak situation

- Published: 2023-11-26 22:34

- Updated: 2023-11-28 15:26

This entire thing needs rework

I have to revisit the upsampling procedure, generate new files, run the tests again and collect new data, as the current procedure seems to be flawed. One of the joys of working and learning in public, I guess :) Until further notice, only the plugin results at 48khz allow for a data-driven comparison.

I recently stumbled upon this video by Ian Shepherd/Production Advice about DSP overshoots and intersample/True Peaks. I strongly recommend watching it; he explains the topic very well!

My understanding of True Peak in a nutshell

'True Peak' metering exists to provide the most precise information possible about the 'true' signal our physical playback devices actually reproduce after conversion, as opposed to the pure, raw sample data of the digital signal inside the computer. Its purpose: preventing potential distortion during conversion of any kind.
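A minimal numeric illustration of the difference between sample peak and True Peak, assuming an idealized, band-limited sine (the `tone` and `db` helpers are hypothetical and not taken from any tool tested here). At a quarter of the sample rate with a 45° phase offset, every stored sample lands at about ±0.707, so a sample peak meter reads roughly -3.01 dBFS, while the continuous waveform a converter ideally reconstructs reaches 0 dBFS.

```python
import math

def db(x):
    """Convert a linear amplitude (full scale = 1.0) to dBFS."""
    return 20.0 * math.log10(x)

# Hypothetical test tone, time measured in samples: a sine at a quarter of the
# sample rate with a 45 degree phase offset, so no sample lands on a crest.
def tone(t_in_samples):
    return math.sin(math.pi / 2 * t_in_samples + math.pi / 4)

# Sample peak: the largest absolute value among the stored samples.
samples = [tone(n) for n in range(64)]
sample_peak = max(abs(s) for s in samples)

# The ideally reconstructed waveform is the continuous sine itself; evaluating
# it on a dense grid (64 points per sample) approximates its maximum.
dense = [tone(i / 64) for i in range(64 * 64)]
dense_peak = max(abs(v) for v in dense)

print(f"sample peak: {db(sample_peak):+.2f} dBFS")  # -3.01
print(f"true peak:   {db(dense_peak):+.2f} dBFS")   # close to 0 dBFS
```

This is the textbook worst case for a single sine and shows why a meter that only reads stored samples can under-read by about 3db.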

To verify or falsify some trust issues I have with metering plugins, and by extension with dynamics processors in general, I decided to do some testing, starting with maximum True Peak detection.

Step 1: Create a test signal

To maximize the potential for misinterpretation, mere white noise didn’t seem suitable to me. Instead, I followed the itch of making a sound that covers the entire dynamic range of 64bit, to also check whether bit depth reduction and dithering can affect meter readings.

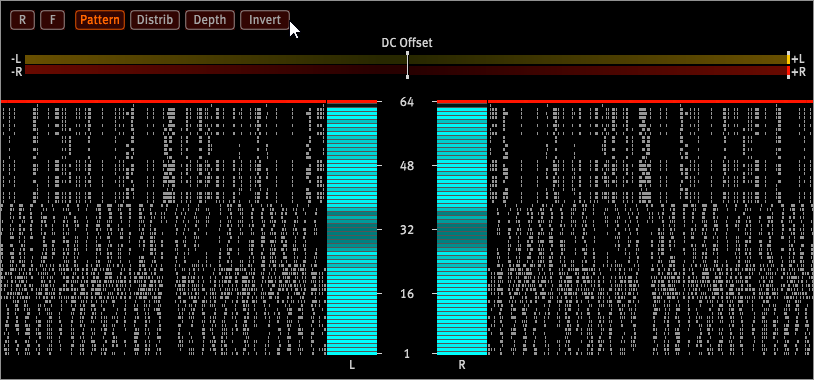

For this, I used 2 instances of Bitwig’s Test Tone generator playing dirac waves: because they are terribly unmusical, and because I had already used them successfully to irritate dynamics processors.

I summed them to -0.4db peak on Bitwig’s master, both sporting randomly modulated pitches to allow for intermodulation distortion. -0.4db was a gut choice, somewhere between ‘not too much’ and ‘not too little’ headroom.

To fill the bits below, I added another instance playing a volume-ramped 2hz saw at -144db, and one playing white noise at -244db.

Goal: create source material entailing a terrible peak structure across the entire dynamic range.

I used SPL Hawkeye’s Bit Depth meter to verify I’m using the full range. Every dot basically says “has signal within 6db range of this bit”.
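The “one dot per bit” reading can be sketched as simple arithmetic, assuming the standard relation of 20·log10(2) ≈ 6.02db of range per bit; how Hawkeye maps levels to dots internally is not documented here, and `bit_for_level` is a hypothetical helper.

```python
import math

DB_PER_BIT = 20.0 * math.log10(2.0)  # about 6.02 dB of range per bit

def bit_for_level(level_dbfs):
    """Return the 1-based bit whose ~6 dB band contains a level in dBFS.

    Bit 1 covers 0 to -6.02 dBFS, bit 2 covers -6.02 to -12.04 dBFS, and so on.
    """
    if level_dbfs > 0:
        raise ValueError("levels above 0 dBFS do not fit into the word")
    return max(1, math.ceil(-level_dbfs / DB_PER_BIT))

# Levels used for the test signal in this article.
for level in (-0.4, -144.0, -244.0):
    print(f"{level:7.1f} dBFS -> bit {bit_for_level(level)}")
```

Under this assumption, the -0.4db diracs sit in bit 1, the -144db saw around bit 24 (24 bits span about 144.5db) and the -244db noise around bit 41.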

I then recorded some cycles of the lovely mess using Melda Recorder in 32bit, at a sampling rate of 48khz. Next, I upsampled the original sound to 96, 192 and 384khz in Acoustica, in the most straightforward manner, without deliberately choosing any particular resampling algorithm. The idea: increasing the sampling rate turns estimated True Peaks into actual sample peaks. You can download the files if you want to verify this yourself.
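Which resampler Acoustica applies isn’t stated here, so the following is only a sketch of why that choice matters. If upsampling merely repeats samples or draws straight lines between them, every new sample is a copy or a weighted average of existing ones, so the sample peak cannot rise. Only band-limited (sinc-style) interpolation reconstructs the waveform between the original samples. The helper names are hypothetical, and the test tone is an idealized sine at a quarter of the sample rate with a 45° phase offset.

```python
import math

def upsample_repeat(x, factor):
    """Naive upsampling: repeat every sample `factor` times (zero-order hold)."""
    return [s for s in x for _ in range(factor)]

def upsample_linear(x, factor):
    """Naive upsampling: straight lines between neighbouring samples."""
    out = []
    for a, b in zip(x, x[1:]):
        for k in range(factor):
            out.append(a + (b - a) * k / factor)
    out.append(x[-1])
    return out

def peak(sig):
    return max(abs(s) for s in sig)

# Every stored sample sits at about +/-0.707, while the waveform reaches 1.0.
x = [math.sin(math.pi / 2 * n + math.pi / 4) for n in range(32)]

for name, sig in (("original", x),
                  ("repeated x2", upsample_repeat(x, 2)),
                  ("linear x2", upsample_linear(x, 2))):
    print(f"{name:12s} peak = {peak(sig):.3f}")  # 0.707 in all three cases
```

Since the sample peak of the files did rise after upsampling (from -0.42 to +0.67db in most readings), the conversion used was apparently neither of these two naive methods.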

To establish a baseline for further testing:

Step 2: Collect baseline stats for comparison

For this, I’m loading the samples into several tools and collecting offline (non-realtime) sample statistics. I assume these are 100% accurate when it comes to max sample peak data, and that they provide very accurate max True Peak level readings as well.

My expectation

If I understand the concept of True Peak analysis correctly, the ‘max True Peak level’ at 48khz should be able to predict the ‘max sample peak level’ at 96khz, at the very least.
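A sketch of the reasoning behind this expectation, assuming an ideal resampler: each doubling of the sampling rate keeps all existing sample instants and adds new ones in between. Under ideal band-limited resampling, the sample peak can therefore only stay the same or rise from 48 to 96, 192 and 384khz, approaching the True Peak from below, and a correct 48khz True Peak estimate should be at least as high as the 96khz sample peak. The two-tone signal is hypothetical; evaluating it analytically on finer grids stands in for a perfect resampler.

```python
import math

FS = 48000    # base sample rate in Hz
FINEST = 8    # finest grid: 8x = 384 kHz; the coarser grids are subsets of it

def signal(t):
    """Hypothetical band-limited test signal (two tones below 24 kHz), t in seconds."""
    return (0.5 * math.sin(2 * math.pi * 17000 * t + 0.3)
            + 0.5 * math.sin(2 * math.pi * 19000 * t + 1.1))

N_BASE = 4800  # 100 ms worth of samples at 48 kHz
fine = [signal(n / (FS * FINEST)) for n in range(N_BASE * FINEST)]

def sample_peak(factor):
    """Sample peak of the ideally resampled signal at FS * factor."""
    stride = FINEST // factor  # keep every stride-th value of the finest grid
    return max(abs(v) for v in fine[::stride])

for factor in (1, 2, 4, 8):
    level = 20 * math.log10(sample_peak(factor))
    print(f"{FS * factor // 1000} kHz: {level:+.2f} dBFS")
```

Whatever the exact numbers, the printed levels never decrease from one row to the next, because every coarser grid is a subset of the finer one.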

Here are my findings from

Acorn Acoustica

| Sample peak vs True Peak (db) | 48khz | 96khz | 192khz | 384khz |
|---|---|---|---|---|
| Max sample peak level | -0.42 | +0.67 | +0.67 | +0.67 |
| Max True Peak level | +0.14 | +0.67 | +0.69 | +0.7 |

What a curious first result. If Acoustica’s True Peak prediction at 48khz were correct, I would have expected the sample peak at 96khz to be around +0.14db. Instead, it’s about half a db higher, at +0.67db. That’s a first surprise. 🤔

Meanwhile, a friend tested

Magix Samplitude

| Sample peak vs True Peak (db) | 48khz | 96khz | 192khz | 384khz |
|---|---|---|---|---|
| Max sample peak level | -0.4 | +0.1 | +0.3 | +0.4 |
| Max True Peak level | -0.5 | +0.4 | +0.4 | +0.4 |

Even more curious: how can a True Peak be 0.1db lower than the max sample peak (-0.5 vs -0.4db at 48khz)? 🤷 The rest of the data only correlates with Acoustica at the 96khz max TP level. Interesting? Now I need to know what Izotope RX has to say about this. …installs the trial…

Izotope RX 10 Advanced (trial)

Since RX includes some stats about possibly clipped samples, I’ll include them as well:

| Sample peak vs True Peak (db) | 48khz | 96khz | 192khz | 384khz |
|---|---|---|---|---|
| Max sample peak level | -0.42 | +0.67 | +0.67 | +0.67 |
| Max True Peak level | +0.03 | +0.72 | +0.72 | +0.7 |
| “Possibly clipped samples” | 0 | 379 | 738 | 751 |

At 48khz, the max True Peak level (+0.03) falls 0.64db short of the max sample peak at 96khz (+0.67). Not only unexpected, but a lot of deviation. It’s also interesting to see the number of possibly clipped samples almost double between 96 and 192khz.

lufs.org

I don’t know who’s behind this website, but I reckon it’s frequently used for checking levels. Thus, it should be checked as well.

| Sample peak vs True Peak (db) | 48khz | 96khz | 192khz | 384khz |
|---|---|---|---|---|
| Max sample peak level | -0.42 | +0.67 | +0.67 | ? |
| Max True Peak level | -0.42 | +0.67 | +0.67 | ? |

Here, the max True Peak levels simply equal the max sample peak levels, so its max TP detection fails at 48khz. The 384khz file made the site calculate endlessly, so I don’t have a result for it.

Offline sample statistics observations

Let’s put the results side by side for easier comparison:

| Sample statistics | @48khz max peak / dbTP | @96khz max peak / dbTP | @192khz max peak / dbTP | @384khz max peak / dbTP |
|---|---|---|---|---|
| Acorn Acoustica | -0.42 / +0.14 | +0.67 / +0.67 | +0.67 / +0.69 | +0.67 / +0.7 |
| Izotope RX 10 Adv. | -0.42 / +0.03 | +0.67 / +0.72 | +0.67 / +0.72 | +0.67 / +0.7 |
| lufs.org | -0.42 / -0.42 | +0.67 / +0.67 | +0.67 / +0.67 | ? |
| Magix Samplitude | -0.4 / -0.5 | +0.1 / +0.4 | +0.3 / +0.4 | +0.4 / +0.4 |

Acoustica, Izotope and lufs.org agree on the max sample peak levels. Let’s neglect Samplitude’s deviation of 0.02db at 48khz.

By contrast, at 48khz none of the max True Peak detectors reliably predicted the max sample peak levels at 96khz. Samplitude doesn’t report any clippings at all.
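The same comparison as plain arithmetic, using only the readings from the tables above: for each tool, the gap between its own 48khz max True Peak estimate and its own 96khz max sample peak. A positive value means the 48khz estimate reads lower than a sample peak that actually exists at 96khz. The dictionary layout is just an illustration.

```python
# Readings in db, copied from the offline tables above:
# tool: (max True Peak at 48khz, max sample peak at 96khz)
offline = {
    "Acorn Acoustica": (+0.14, +0.67),
    "Izotope RX 10 Adv.": (+0.03, +0.67),
    "lufs.org": (-0.42, +0.67),
    "Magix Samplitude": (-0.5, +0.1),
}

# Positive shortfall: the 48khz True Peak estimate reads lower than the
# sample peak found in the same tool's 96khz reading.
shortfall = {tool: round(sp_96 - tp_48, 2) for tool, (tp_48, sp_96) in offline.items()}

for tool, value in shortfall.items():
    print(f"{tool:20s} {value:.2f} db short")
```

This yields shortfalls between 0.53db (Acoustica) and 1.09db (lufs.org); none of the four comes close to zero.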

Interestingly, at 192khz Izotope RX reports a slightly higher max True Peak level (+0.72) than the sample peak actually present at 384khz (+0.67), while reporting more possibly clipped samples at 384khz than at 192khz.

I’m irritated by the results so far. If offline TP statistics at 48khz deviate that much from the ‘truth’ at 96khz, my enthusiasm for accurate real-time results is curbed. This isn’t exactly the trustworthy baseline I was hoping for.

Let’s quickly check that against

DAW meters’ maximum sample peak levels

| DAW | @48khz | @96khz | @192khz |
|---|---|---|---|
| Ableton Live 10 | -0.42db | +0.67db | +0.67db |
| Bitwig 4 | -0.4db | +0.7db | +0.7db |
| Reaper 7 | -0.4db | +0.7db | +0.7db |

No surprises here, since both Bitwig and Reaper round their meter values to one decimal place. Nice!

Given that a +0.67db max sample peak recurs at 96khz and above, I’ll use it as the most educated target reference for testing VST3 plugins in Bitwig at 48khz.

Step 3: Realtime plugin test

The process should be as straightforward as this:

- Set the DAW’s sample rate according to the file’s sampling rate, to avoid resampling artefacts

- Insert file in DAW or editor of choice

- Set the track playing back the file to unity gain (0db)

- Insert the plugin exclusively on the two-bus, to avoid any interference from other plugins

Results at 48, 96 and 192khz

I’m including the number of detected clippings (c) where reported. I have to skip testing at 384khz, because it exceeds the capabilities of the audio interfaces available to me (UAD Apollo 8, RME Multiface, Motu M4).

The results are sorted by predicted maximum True Peak level at 48khz, from highest to lowest. In a perfect world, oversampling should put them in the ballpark of +0.67dbTP at 48khz.

| Product | dbTP @48khz / c | dbTP @96khz / c | dbTP @192khz / c |
|---|---|---|---|
| FLUX::PureAnalyzer (SG-mode)* | +1.0 | +0.7 | +0.7 |
| TDR Limiter No6 GE | +0.5 | +0.7 | +0.7 |
| Voxengo Span Plus | +0.4 / 35 | +0.7 / 401 | +0.7 / 824 |
| Youlean Loudness Meter Pro | +0.3 / 15?* | +0.7 / 20?* | +0.7 / 20?* |
| Melda Loudness Analyzer | +0.11 | +0.62 | +0.67 |
| Goodhertz Loudness | +0.11 | | |
| DMG Trackmeter | -0.1 | | |
| Adptr Metric AB* | -0.1 | +0.7 | +0.7 |
| Adptr Streamliner* | -0.1 | +0.7 | +0.7 |
| SPL Hawkeye | -0.3 | +0.7 | +0.7 |

- Trial version used for testing

- I own licenses
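A quick arithmetic check of the plugin table against the +0.67db target, using only the 48khz readings listed there. The target and product names come from this article; the script is just an illustration.

```python
TARGET = 0.67  # max sample peak of the 96khz file, used as reference (db)

# dbTP readings at 48khz, copied from the plugin table above.
readings_48k = {
    "FLUX::PureAnalyzer (SG-mode)": +1.0,
    "TDR Limiter No6 GE": +0.5,
    "Voxengo Span Plus": +0.4,
    "Youlean Loudness Meter Pro": +0.3,
    "Melda Loudness Analyzer": +0.11,
    "Goodhertz Loudness": +0.11,
    "DMG Trackmeter": -0.1,
    "Adptr Metric AB": -0.1,
    "Adptr Streamliner": -0.1,
    "SPL Hawkeye": -0.3,
}

# Deviation of each reading from the target (negative = reads too low).
for product, reading in readings_48k.items():
    print(f"{product:30s} {reading - TARGET:+.2f} db vs. target")

spread = max(readings_48k.values()) - min(readings_48k.values())
print(f"spread between highest and lowest reading: {spread:.2f} db")
```

By this arithmetic, the readings span 1.3db, from 0.33db above the target (FLUX) to 0.97db below it (SPL Hawkeye).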

Notable observations during testing

- (T) FLUX::PureAnalyzerSystem is a standalone program, offering two modes of operation:
  - Standalone: monitoring the selected hardware output device
    - In this mode, the max True Peak level did not exceed 0.0 dB
  - SamplerGrabberV3: monitoring the input of a VST plugin via local network
    - This mode was used to collect the data above
    - In this mode, the max True Peak meter did not respond to the reset button
  - The automatic setup did not install the VST plugin properly; it required manually copying the plugin, including its folder tree of data needed for operation

- Voxengo Span’s numerical True Peak clipping counter ‘feels’ most specific
- Youlean Loudness Meter’s True Peak clip history is the most actionable
  - Allows for the most tangible before/after editing comparison
  - However, it displays fast, consecutive clippings as a continuous bar
  - Therefore, the number of clippings cannot be interpreted precisely
- (T) Adptr Audio Metric AB’s True Peak meter neither holds maximum True Peak values nor tracks the highest value
  - Thus, it requires constant, focused observation to spot max TP levels as they swiftly occur
- (T) Adptr Audio Streamliner requires interpreting a red, 2-pixel bar against a single-pixel scale to identify the True Peak level
  - At 100% UI size, the result at 48khz can be read as either -0.2 or -0.1
  - The UI requires resizing to at least 150% for the highest accuracy

Step 4: Let’s try to draw conclusions

When using the +0.67db max sample peak of the 96khz file as the baseline for the 48khz results, a generous margin beyond rounding errors must be allowed to consider the results above useful, that is, a reliable source for further decision-making processes.

Ironically, 48khz is the context where the accuracy of True Peak meters matters the most, because from 96khz upwards, max TP and sample peak levels potentially deviate by only 0.08 decibels. I interpret this as an acceptable rounding error when compared with the matching offline sample statistics collected above (disregarding Samplitude’s readings).
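To put the decibel differences discussed here into perspective, a small conversion from db to linear amplitude, using the standard relation ratio = 10^(db/20) for amplitude levels. The function name is illustrative.

```python
def db_to_ratio(db):
    """Linear amplitude ratio for a level difference given in dB."""
    return 10.0 ** (db / 20.0)

# Differences that appear in this article.
for diff in (0.08, 0.64, 1.0, 3.0):
    change = 100.0 * (db_to_ratio(diff) - 1.0)
    print(f"{diff:4.2f} db difference = {change:4.1f}% difference in amplitude")
```

Per this relation, 0.08db is less than 1% in amplitude, 1db is roughly 12%, and 3db roughly 41%.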

What I learned

True Peak detection is more complicated than I deemed it to be. When it comes to DAWs, I very much understand the need for efficiency, and thus the lack of TP detection in their meters. That’s why we have metering plugins, right?

I had some deviation-related trust issues, and it was useful to test my sanity. But: which of the above is actually accurate? 🤷

Span’s readings come closest to my impression of ‘actual signal integrity’, and TDR Limiter No6 GE pins it.

I also would have expected professional sample editors to be as accurate as possible.

Lastly: Voxengo Span’s plaintext statistics make its readings tangible. I wish more meters would provide the number of detected True Peaks above 0dbTP. Because:

What does ‘professional’ even mean in the scope of music production?

To me, it’s a process yielding reproducible results in the most reliable manner possible. AES, EBU etc. imply standards, none of which seem to carry weight in the scope of TP metering and detection. Instead, they are reduced to mere marketing terms, borrowing a degree of authority for the sake of suggesting ‘professional’.

The standard as defined by the ITU is fuzzy at best (.pdf), and dates back to 03/2011. It doesn’t take the system’s sampling rate into account, nor does it require a specific amount of oversampling or recommend a specific approach to the implementation.
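A minimal sketch of the common approach to True Peak estimation: oversample the signal, then take the sample peak of the result. It assumes 4x oversampling and a generic Hann-windowed sinc interpolator with an arbitrary kernel length; neither is taken from the ITU document or from any product tested here, and the function names are hypothetical. With any finite oversampling factor, a peak falling between the oversampled points can still be missed, so such estimates tend to read slightly low.

```python
import math

def upsample_sinc(x, factor=4, half_taps=16):
    """Integer-factor upsampling with a Hann-windowed sinc interpolator.

    Generic textbook interpolation, not the filter of any standard or product;
    `half_taps` (kernel half-length in input samples) is an arbitrary choice.
    """
    out = []
    for i in range(len(x) * factor):
        t = i / factor                      # position in input-sample units
        base = math.floor(t)
        acc = 0.0
        for k in range(base - half_taps + 1, base + half_taps + 1):
            if 0 <= k < len(x):
                d = t - k                   # distance to input sample k
                sinc = 1.0 if d == 0 else math.sin(math.pi * d) / (math.pi * d)
                window = 0.5 * (1.0 + math.cos(math.pi * d / half_taps))
                acc += x[k] * sinc * window
        out.append(acc)
    return out

def true_peak_db(x, factor=4, half_taps=16):
    """Estimate the True Peak as the sample peak of the oversampled signal.

    Output near both ends is skipped, because the kernel is truncated there.
    """
    up = upsample_sinc(x, factor, half_taps)
    margin = half_taps * factor
    inner = up[margin:len(up) - margin]
    return 20.0 * math.log10(max(abs(v) for v in inner))

# Idealized test tone: a quarter of the sample rate, 45 degree phase offset.
x = [math.sin(math.pi / 2 * n + math.pi / 4) for n in range(256)]
sample_peak_db = 20.0 * math.log10(max(abs(s) for s in x))

print(f"sample peak:       {sample_peak_db:+.2f} dBFS")  # -3.01
print(f"4x True Peak est.: {true_peak_db(x):+.2f} dBTP")  # close to 0
```

Longer kernels and higher factors bring the estimate closer to the true maximum at the cost of more computation; this trade-off is one reason different meters can disagree.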

How much does a deviation of 1db even matter?

As usual, this depends on many factors, from source material to the amount of processing. Those who predominantly work with recorded material and apply rather subtle processing at 96khz and above may shrug and wonder what this fuss is about.

For me, as a sound designer and electronic musician who loves exploring extremes while trying to maintain an overall organic sound aesthetic inside the DAW, it’s much more challenging. It’s fairly easy to create sounds that defy the laws of physics by linearly ramping up envelopes from -inf to full scale from one sample to the next.

Add aliasing artefacts resulting from saturating modulated signals above 4khz in complex, intertwined and cascading processing chains, and the situation quickly becomes unpredictable. While this test revealed a deviation of just 1db, I have already encountered differences of up to 3db in real-world scenarios. I’ll update this document once I run into such a situation again.

Moreover, letting the project above play for 5 minutes made Span indicate occasional True Peak values of up to +2.7db. Not sure about you, but I don’t babysit my meters during the creative process. I sample them when necessary, and potentially miss a lot of what’s really going on in my signal chains.

To go further: if dedicated products designed to detect a signal’s True Peaks already fail to be precise, what does this mean for the average envelope follower in a dynamics processor?

And what does this imply about the general notion that oversampling fixes everything?

HELP. 🤷
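The envelope-follower question can be made concrete with a minimal sketch, assuming a generic peak envelope follower (instant attack, exponential release) that only sees stored sample values; it is not modelled on any specific product. Fed an idealized sine at a quarter of the sample rate with a 45° phase offset, whose waveform reaches 0 dBFS, the follower never rises above roughly -3.01 dBFS.

```python
import math

def peak_follower(x, release=0.999):
    """Generic peak envelope follower: instant attack, exponential release.

    It only ever sees the stored sample values; `release` is an arbitrary
    per-sample decay factor chosen for illustration.
    """
    env = 0.0
    out = []
    for s in x:
        level = abs(s)
        env = level if level > env else env * release
        out.append(env)
    return out

# The waveform crests at 1.0 (0 dBFS), but every sample sits at about 0.707.
x = [math.sin(math.pi / 2 * n + math.pi / 4) for n in range(1024)]
env = peak_follower(x)

print(f"detector maximum: {20 * math.log10(max(env)):+.2f} dBFS")  # -3.01
```

Running the same detector on an oversampled copy of the signal narrows this gap, but only as far as the interpolation quality and the oversampling factor allow.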

Full disclosure

I’m trying, to the best of my abilities and biases, to work through this topic in as data-driven and transparent a way as possible. I also consider this a living document, following the tradition of “working with the garage door up”.

If you find a mistake, spot a flawed train of thought, something lacking clarification or anything else: let me know! I’m not a programmer and can’t write code. My understanding of DSP is based on personal observation, personal experience, curious rabbit-holing, and abstracting conversations with developers to the level of my comprehension, which I claim to be neither complete nor objective.

I’m affiliated with AudioRealism.se as an external set of ears and a helping hand in product development, beta testing and sound design. As a sound designer, UX designer and beta tester, I have contributed commercially as well as non-commercially to products by Ableton, Bitwig, Boom Library, Cytomic, Native Instruments, Polac, Plogue, SmartElectronics, SoundTheory, SurrealMachines, and XLN Audio, both under NDA and on the basis of mutual trust. At the time of writing, I’m involved in the development of a non-commercial plugin by Variety of Sound.

About money and software licenses

I received neither payment nor free/NFR licenses for this test. I either purchased licenses for the tested products in the past with my own money (see below), or used trial versions for the purpose of this research, which are marked accordingly.

I own personal licenses for Acorn Acoustica Standard Edition, Melda Loudness Analyzer, SPL Hawkeye, TDR Limiter No6 GE, Voxengo Span Pro and Youlean Loudness Meter Pro.

In the past, I received NFR licenses of Ableton Live 10 and Bitwig Studio 4 for the purpose of testing AudioRealism products, as well as creating factory content. I’m not using Reaper 7 in my personal projects. It is still in evaluation mode.

Motivation and conflict of internal interest

This research is rooted in me trying to scratch my own itch. I started seriously producing music at home using Protracker on the Amiga during the early 90s, while also working on projects at a friend’s fully featured hardware studio.

The more I learned about hardware-based processes and strategies, the more I tried applying them to my software-rooted bedroom studio approach.

As time progressed and realtime synthesis and VST plugins became the standard, the gap between hardware- and software-based production narrowed, down to subtle differences in sound aesthetics that often defied precise definition.

At the same time, I realized that the processes and strategies for achieving a raw, yet organic sound aesthetic differed between hardware- and software-based setups. And, honestly, it became my obsession, because I had, and still have, the impression that my processes and strategies in an exclusively digital environment involve twice as many steps compared with working purely hardware-based. This collides with my love for working as efficiently and effectively as possible.

With utmost and sincere respect for every product developer out there:

I firmly believe in technological progress, especially in the field of creative tools, and have trouble accepting the status quo. I also believe in engineering processes driven by human attention to detail, instead of outsourcing it to machine learning, or to no-brainer implementations like oversampling as a band-aid that magically resolves issues. Having contributed to products and projects that even I deemed impossible to realize, I believe the devil is in the details.

At the same time, I very much understand the nature of a competitive market. Being a UX-designer for web projects during the rise of SEO taught me a lot about the diffusion of innovation. Hashtag Dark UX patterns becoming bespoke best practices.

A call for participation

The test is easily reproducible. If your meter or product isn’t included in the test: download the files and test it yourself. If you don’t trust my results: download the files and test it yourself. Even cooler: please share your results with me, so I can include them in the list.

You can find me here:

Thanksgiving

- Joachip/robotplanet.dk for checking Samplitude, helping me digest complex matter, keeping me on track, and supporting me with valuable questions and DSP insights for 20 years, and for your patience despite me being a challenging, anally retentive prick at times 💖

- Stephan/Vapnik Sound for testing DMG Trackmeter, Ghz Loudness and Metric AB, as well as your patience and trust in me rabbit-holing this stuff for reasons, at times beyond my rational comprehension

- Herbert/VoS for your curiosity and trust in my perspective, as well as supporting my hunch with further research and conversations